Databricks ML Runtime vs Snowpark ML

Chapter 2: Full Sprint: Databricks ML Runtime vs Snowpark ML

We initiated the comparison between DBR ML runtime and Snowpark ML by employing an openly accessible dataset from Kaggle. The data setup process will mirror the steps elucidated in the preceding chapter, including the exploratory data analysis (EDA) procedures. We would try to cover all possible aspects of a production-ready machine-learning pipeline.

Databricks steps:

Databricks provides jupyter like notebooks under its workspace for Data Scientists/ Analysts/ Engineers to perform data related experiments

Features which were evaluated as part of this comparison:

| MLFlow integration for keeping track of your experiments | Provides Revision History. |

| Real-time collaboration. | Library Explorer |

| LLM powered assistant. | Supports SQL, Python, PySpark, Scala |

| Data Catalog Browser. | Connection with local machine. |

| Supports commenting. |

1

To begin with we try to upload the data to DBFS and use the same by clicking a button 'Create Table in the Notebook'

2

A new notebook was created with auto generated code

3

This has automatically inferred the schema and created the dataframe.

4

We try to install a third-party library ot instal 'Sharp' library for model explainability

5

We also install native pyspark libraries needed for our pipeline

6

We can also impose the schema and create the dataframe for better control on data types

7

Now we are satisfied with the data

8

We also have options to create a view or table

9

Use sql to query the table by using the magic %sql inline

10

We can also create a table from the dataframe. Once saved, this table will persist across cluster restarts as well as allow various users across different notebooks to query this data

11

Since few column names have spaces, we would correct them

12

We then create a function to rename few columns and do some basic transformations eg from ‘Engine’ would extract the cubic capacity using regex and then transform into integer and so on for other columns

13

Our dataframe now has the following columns and datatypes

14

Post extraction of relevant details we would like to cast ‘Engine’, ‘Max-Power’ and ‘Max_Torque’ into integer

15

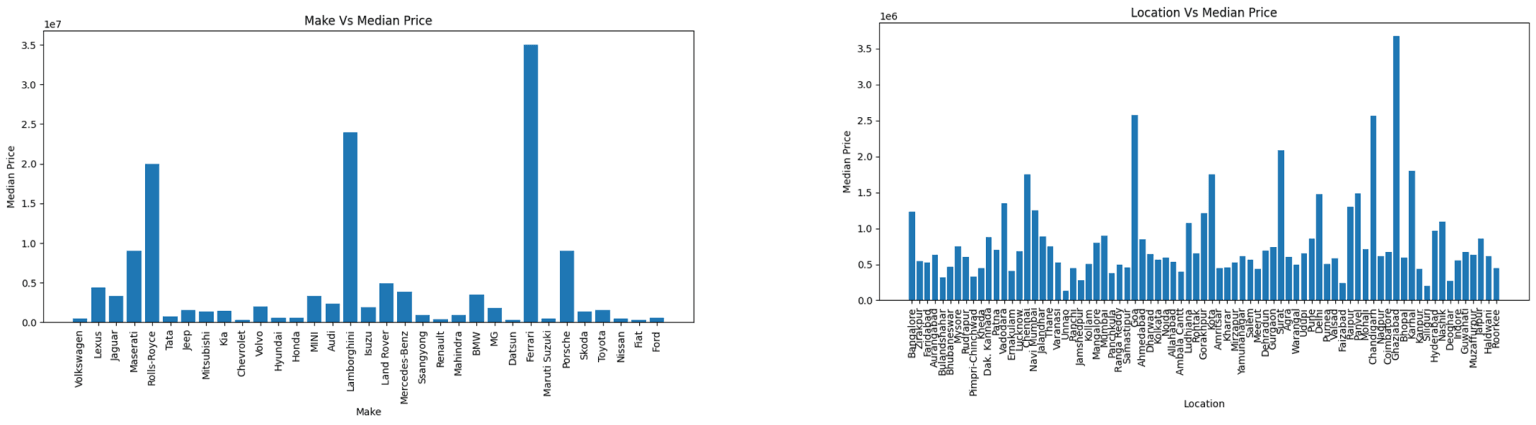

Databricks notebooks provide a platform for developers, including Data Scientists and Data Analysts, to carry out univariate and bivariate plotting. This can be accomplished by utilizing libraries such as Seaborn and Matplotlib to update the chart onthe notebook.

16

17

We also see some bivariate analysis

18

Some sample output images

19

20

21

Steps like data cleaning and preprocessing are pretty much straight forward while using databricks as we can use any third-party imputation tools.We can achieve feature engineering by building simple logics and can be executed with or without the help of a user defined function.

22

Further in order to do feature encoding databricks provides packages for categorical encoding in python as well as native pyspark.

23

Databricks offers an integrated feature store service designed for storing engineered features. These features can be efficiently utilized and shared among teams of Data Scientists. The platform supports both online and offline feature stores, catering to needs ranging from model training to inferencing (real-time, batch, and streaming). Alongside its impressive user interface, Databricks also provides users with valuable data lineage insights

24

25

A new notebook was created with auto generated code

26

In databricks we can train sklearn as well as spark’s MLlib models in the notebook interface. MLlib models are optimized to work on distributed data, making it a good choice for big data scenarios and perform distributed computations (This means they can handle data partitioning and processing across multiple nodes in a cluster). Using the data, we used native MLlib packages to run and compare models like RandomForest, Adaboost and GradientBoosting. The following function would create and run models using native spark libraries.

27

We can try similar modeling using sklearn packages in the same notebooks.

28

Databricks offers built-in support for parallel hyperparameter training through the 'SparkTrials' functionality, which integrates state-of-the-art HYPEROPT – a Bayesian optimization-backed hyperparameter tuning technique. Alternatively, libraries like OPTUNA can also be employed for customized hyperparameter tuning. We can use the output to compare against the best models

29

The parameters of the best model are

30

Databricks offers its own built-in MLFlow ‘Experimentation’ tab, readily available for tracking the data science lifecycle

31

We can see all the experiments/versions of the ML runs in the same ‘Experiments’ tab.

32

When it comes to model explanation, Databricks notebooks offer similar capabilities to any Jupyter notebook. The SHAP library can be employed to explain a machine learning model, and it also offers the possibility to enhance SHAP calculations using Pyspark and Pandas UDFs. Additionally, in Databricks, third-party libraries like LIME can also be utilized.

33

We can create shaply plots now for model explainability

34

A new notebook was created with auto generated code

35

In Databricks we get support for real-time, batch and streaming deployment strategies for any possible use cases. For the time being we have deployed a model as an endpoint for real time inferencing.

36

Databricks also provides a repos service with connection with multiple git providers for storing and versioning of code.

Snowpark steps -

Snowflake provides worksheets, out of the box for performing data-science activities and experimentations for a Data Scientist/ Analyst/ Engineer.

- Support for SQL and Python worksheets.

- Provides Revision History

- Data Explorer

- Pre-defined Package Explorer

- Connection to local machine (only way to use jupyter like interface)

Features

1

We can upload the data to Snowpark in the same way as we did in Scenario 1.

2

In Snowpark, users are limited to using the libraries pre-provided by the environment. To employ third-party packages, users must either establish a connection between their cluster and the local environment, utilizing the Jupyter Notebook interface, or navigate a cumbersome work-around as detailed in the article.

3

Worksheets does not provide a graphical output as they are py files and therefore users are left with the following choices: Connect to the local jupyter notebook. Save plots and view them manually outside a worksheet. Use the “chart” functionality of worksheets. The worksheets do provide a “chart” option to visualize data but are limited to only 4 kinds of in-built charts.

4

A new notebook was created with auto generated code

5

In Snowpark there is a potential issue to run the functions by pasting its name in settings and running. This annoying feature may lead to issues during development, and we tend to forget adding it. We have the capability to perform data cleansing with ease. The key distinction from Databricks is that the utilization of third-party packages within the worksheet could potentially pose an issue We can use a snowpark dataframe by using inbuilt libraries present in snowflake.ml.preprocessing else users can use the anaconda-python libraries. We have been able to successfully test by importing a lot of libraries.

6

We were able to write and test a lot of functions in order to test the differences between snowpark’s native dataframe and pandas dataframe. The two code samples use the same kind of transformations between snowpark dataframe and pandas dataframes and as follows with minor differences in using subsetting features of pandas(tempPdDf['ENGINE']) vs snowpark dataframe(with_column). Snowpark dataframe transformation code using UDF fix_values to get a clean modular code:

7

Similar differences were observed while trying to create a function for imputation ‘na’ as ‘Empty’. In snowpark dataframe as we need to use dictionary to pass the parameters eg: cat_column_dictonary = {feature:'Empty' for feature in feature_list[0]} while in pandas we can further concise If the data type of the provided replacement value does not match the column data type, fillna will produce a warning and skip that column. This can be avoided by specifying for each column what the replacement value should be, using a dictionary

8

Another distinction found while trying to impute the median values with in-built ‘median’ function was that it returns a view which we need to collect and use transformation before extracting the final values. median(feature).as_(feature)).collect()[0].as_dict().get(feature)

9

The features like categorical and feature engineering were smooth and came through without issues. But there are differences in usages between pyspark vs pandas vs snowpark datasets

10

11

Snowpark has support for feature-store based on the link and link however this was not found to be a part of snowsight/worksheets and only available while doing local development. This is in contrast to what we have explored as a part of Databricks.

12

In snowpark we can train sklearn and models provided by snowflake’s ml modeling package - link

13

Snowpark's worksheet is limited to using hyperopt but this is limited to use only with pandas dataframe and we could not use it with snowpark native dataframe. The installation of third-party libraries like OPTUNA can prove to be cumbersome. Moreover, built-in support for parallel/distributed hyperparameter tuning is absent. Nonetheless, achieving this can be done through an extended approach. In this approach we need to create a UDTF (User Defined Table Functions) as compared to Databricks where we used a function with hyperopt with pyspark.

14

Based on the approach we have been able to also try distributed hyperparameter tuning but could not use snowpark native dataframe and had to change to pandas with one model RandomForestRegressor but with a range of hyperparameters and the result was satisfactory.

15

While trying out MLflow we found that Snowpark leverages mlflow via snowpark/anaconda which means we can use MLflow to log training jobs from python stored procedure. Reference to link1 and link 2. But this will still use Azure for experiment tracking and to see the model artifacts/ runs and their corresponding metrics. Since we were interested in only trying to use a snowpark dataframe we did not delve deeper into this as pyspark Mllib has native support for MLflow.

16

In worksheets users can utilize the SHAP libraries to perform calculations, however, to see the plots users need to save the plot and view manually. To avoid that, users can use the support for connection with the local environment to run jupyter notebook on VS Code and view plots in real-time.

17

Models can only be deployed for batch inferencing and there is no support for real-time or streaming deployments. For batch deployment, there were two approaches which were discovered:

18

We can train the model locally, upload it to a stage and load it from the stage when the UDF is called.

19

20

In snowpark we can train sklearn and models provided by snowflake's ml modeling package - link

21

We can see a ‘procedure definition’ being available which shows the underlying code used to convert the model output to SPROC.

22

Git support is not available on snowflake’s Snowpark, unlike repos on Databricks the team could not find any direct support for git on the environment. For snowpark deployment we saw a newly available Snowpark Container Service from the link.Snowpark Container Services (SPCS) is a fully managed container offering that allows you to easily deploy, manage, and scale containerized services, jobs, and functions, all within the security and governance boundaries of Snowflake, and requiring zero data movement. Since this was only available to a subset of customers as a private view, we could not explore it further. Note: Using and connecting with a local Jupyter notebook on a platform such as VS Code we can overcome this issue and push code to GitHub from our local machine.

Conclusion

Databricks simplifies the process of swiftly uploading and analyzing CSV files. Notebooks offer an intuitive interface and the ability to leverage various popular Python plotting libraries.

In contrast, Snowflake necessitates the initial creation of tables before data upload, introducing multiple steps just to begin. Worksheets are less user-friendly when it comes to handling multiple code blocks, representing a significant departure from the favored user experience of notebooks. The extent to which plotting libraries can be utilized remains uncertain, often leading to challenges that are hard to resolve.

| Tasks | Snowpark (dataframe) | Databricks (pyspark dataframe) |

|---|---|---|

| Data Loading | Creating a table is necessary before uploading a dataset. | Transferring the dataset from the local file system to the Databricks File System (DBFS). Creation of table is optional |

| Environment Setup | Limited to existing libraries, cannot directly install new libraries in worksheets. Workaround available. | It's straightforward to install any required library without encountering difficulties. |

| Data Cleaning |  | |

| EDA | Univariate, Bivariate and Multivariate | Unable to plot graphs, need to use the worksheet's chart feature which has limited plots. | Able to do end to end EDA with libraries such as seaborn and matplotlib on Databricks notebook. |

| Data Transformation | | |

| Null Value Imputation | | |

| Categorical Encoding | | |

| Feature Engineering | | |

| Feature Store | Feature store not natively available. External services required in the workaround. | In-house feature store is available. |

| Train Test Split | | |

| Model Training | Supports snowflake.ml.modeling, sklearn, tensorflow, pytorch models. | Supports Spark MLlib, tensorflow, pytorch models. |

| Auto ML | No support for auto ML | Provides in-built auto ML service for building automated machine learning experiments. |

| Hyperparameter Tuning | Distributed Hyperparameter Tuning | For snowflake.ml.modeling models, can utilize GridSearch or RandomSearch exclusively. Using other libraries like Sklearn and tensorflow, it offers the added benefit of hyperopt for hyperparameter tuning. | Have the capability to leverage hyperopt, Optuna, GridSearchCV, and RandomSearchCV for fine-tuning MLlib, sklearn or tensorflow models. Also support distributed/parallel hyperparameter tuning with hyperopt. |

| MLflow & Experiment Tracking. | No in-built MLflow support, can connect to external server such as AML experiments | Inbuilt MLflow experiment support. |

| Model Explainability | Supports SHAP but cannot generate plots on worksheets. | Supports SHAP and lime with support for distributed SHAP. |

| Model Deployment on an endpoint | Deployment services are currently in private preview as Snowpark Container Services. | Available using Databricks model serving. |

| Model Deployment | Batch deployments available | Available using Databricks model serving. |

| LLMs | No inbuilt LLM, can support open source | Available using Databricks model serving. |